In recent years, artificial intelligence (AI) has made significant progress in natural language processing (NLP), and the recent success of ChatGPT has showcased the power of large language models (LLMs). ChatGPT is built on a language model with 175 billion parameters, which requires a large number of GPUs for high-speed parallel computing; few companies have that environment or capability. With the mission of helping Taiwan connect with this AI 2.0 trend, Taizhi Cloud has successfully built the large language model BLOOM (176 billion parameters) on its Taiwania 2 AIHPC platform. It has also launched a one-stop integrated solution, the "AI 2.0 High-Computing Power Consulting Service," which bundles AI experts, technical teams, development environment setup, and AIHPC high-computing power resources. Building on Taizhi Cloud's existing BLOOM work, the service lets users start immediately with a "Ready to Go LLM" offering, so that research teams and industry can develop next-generation AI applications and enter the AI 2.0 era.

Chen Zhongcheng, Chief Technology Officer of Taizhi Cloud, pointed out that combining AI technology with large language models (LLMs) is a trend in industrial technology development, but that it is extremely difficult for enterprises to execute LLM projects independently. For that reason, the company launched its one-stop AI 2.0 high-computing power consulting service, so that enterprises can focus on the research and development of their own projects without other concerns. Taizhi Cloud has long provided a powerful AIHPC platform, which is essential for success. It now pairs that platform with its technical team to offer enterprises a complete set of external AI support under a new service model. Many enterprises are currently in talks with Taizhi Cloud about cooperation.

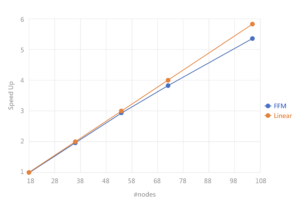

Take the BLOOM large model completed by Taizhi Cloud as an example: its dataset covers 46 human languages and 13 programming languages, the model has 176 billion parameters, and the total data volume exceeds 1.5 TB. Training ran across nodes on 840 GPUs. Performance approached the theoretical linear-scaling value, the training converged, and GPUs can be added for parallel computing as needed so that capacity scales out linearly.

Running the BLOOM large model on the AIHPC platform delivers near-ideal performance that scales linearly across nodes.

To drive LLM projects independently, enterprises must overcome five major hurdles: mastering distributed training techniques for large-scale models, securing enough computing power (measured in petaflop/s-days, or pfs-days), understanding fine-tuning techniques, running large-scale model inference, and operating a high-performance AIHPC environment.

Partnering with Taizhi Cloud addresses these challenges. First, Taizhi Cloud's AIHPC platform runs on a world-class supercomputer that ranks among the top 100 green computing platforms globally. It has 2,016 GPUs connected via NVIDIA NVLink technology and delivers 9 PFLOPS of computing power. Combined with the platform's parallel file system and InfiniBand ultra-high-speed networking, it is well suited to running large LLM workloads such as BLOOM. Second, Taizhi Cloud has already completed the BLOOM environment setup and optimization, and its technical team is highly experienced in distributed training of large models, which saves enterprises GPU usage costs and model training time. Furthermore, the AIHPC platform has a comprehensive security protection mechanism covering data security, network security, APT protection, DDoS mitigation, and security management. It also has an established information security maintenance center that protects the data and application services on the platform, making it a strong choice for enterprises or research institutions launching LLM projects.
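The computing-power hurdle above is stated in petaflop/s-days. As a rough illustration of what that unit means, the sketch below converts a compute budget into wall-clock days on a platform with the 9 PFLOPS figure quoted in this article. The function name `days_needed`, the 1,000 pfs-day budget, and the 50% utilization are hypothetical values chosen for illustration only; they are not Taizhi Cloud figures.

```python
SECONDS_PER_DAY = 24 * 60 * 60

# One pfs-day is 10^15 floating-point operations per second,
# sustained for one full day.
FLOP_PER_PFS_DAY = 1e15 * SECONDS_PER_DAY  # 8.64e19 operations


def days_needed(budget_pfs_days: float, peak_pflops: float, utilization: float) -> float:
    """Wall-clock days to deliver a compute budget at a sustained utilization.

    Hypothetical helper for illustration; not part of any vendor tooling.
    """
    if peak_pflops <= 0:
        raise ValueError("peak_pflops must be positive")
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return budget_pfs_days / (peak_pflops * utilization)


if __name__ == "__main__":
    platform_peak_pflops = 9.0  # figure quoted in the article
    budget_pfs_days = 1000.0    # hypothetical budget, not from the article
    utilization = 0.5           # hypothetical sustained fraction of peak

    days = days_needed(budget_pfs_days, platform_peak_pflops, utilization)
    print(f"1 pfs-day = {FLOP_PER_PFS_DAY:.3e} floating-point operations")
    print(f"{budget_pfs_days:.0f} pfs-days at {utilization:.0%} of "
          f"{platform_peak_pflops} PFLOPS takes about {days:.0f} days")
```

Real training runs rarely sustain peak throughput, so the utilization factor dominates any such estimate and has to be measured rather than assumed.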

● Learn about the "AI 2.0 High-Computing Power Consulting Service": https://tws.twcc.ai/ai-llm