“This is not merely a technical issue; it is fundamentally about rebuilding social trust. Without trust mechanisms to support it, the evolution of AI will ultimately be like building a tower on sand—no matter how high it rises, it cannot endure.”

I. From an Efficiency-Driven Era to a Trust-Driven Turning Point

Over the past decade, competition in AI has centered on a single question: whose model is stronger?

Nations and corporations have poured massive investments into training ever-larger models and building more powerful GPU clusters in pursuit of speed and accuracy. However, as AI begins to truly enter the public domain—healthcare, education, and urban governance—it has become clear that the real challenge is no longer whether AI can compute, but whether it can be trusted.

Consider a medical AI that misdiagnoses a condition: who bears responsibility? Or consider a self-driving system that causes an accident because of biased data: should liability rest with the company, the developer, or the data provider? AI is crossing the boundary from technological innovation into the realms of ethics and governance. As a result, society is gradually shifting from an efficiency-driven paradigm toward a new trust-driven era.

II. The Three Layers of AI Trust: Data, Systems, and Governance

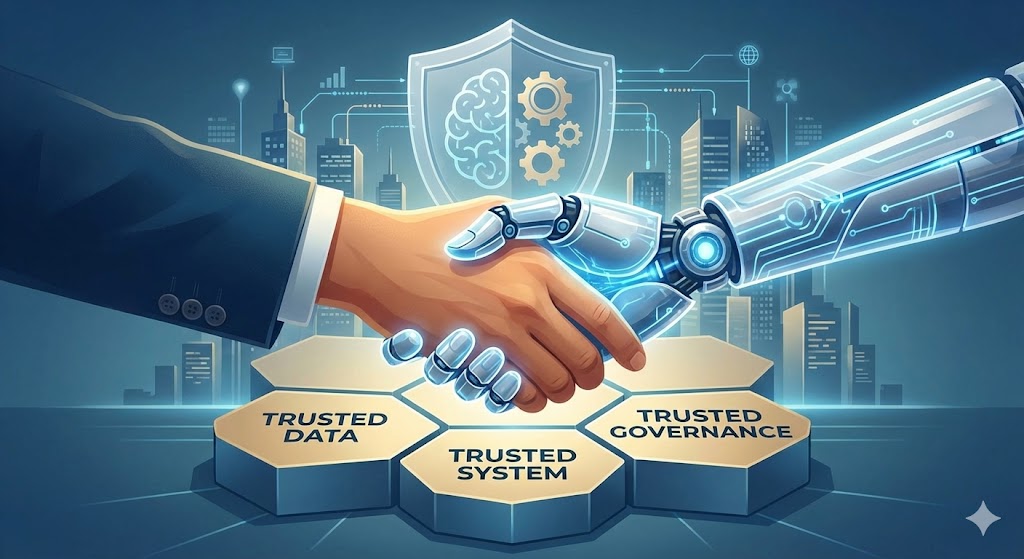

Trust in AI rests on three foundational layers: trusted data, trusted systems, and trusted governance.

1. Trusted Data

Data is the fuel of AI. If data is contaminated, biased, or collected without clear authorization, even the most sophisticated AI outputs will fail to earn public confidence.

Data governance therefore marks the starting point of AI trust. Practices such as data lineage tracking and conditional consent ensure that every dataset used by AI systems is traceable, auditable, and obtained with proper authorization. This level of transparency is a prerequisite for societies to be willing to open data resources and share collective intelligence.
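To make lineage tracking and conditional consent concrete, here is a minimal Python sketch. The `Consent` and `LineageRecord` classes, the `authorize` function, and all field names are hypothetical, introduced only for illustration. The sketch assumes consent is expressed as a set of permitted purposes with an expiry date, and that a SHA-256 fingerprint of the data is enough for an auditor to detect later changes.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import date


@dataclass(frozen=True)
class Consent:
    """Hypothetical consent grant: permitted purposes plus an expiry date."""
    purposes: frozenset
    expires: date

    def permits(self, purpose: str, on: date) -> bool:
        # Consent is conditional: it must cover this purpose and still be valid.
        return purpose in self.purposes and on <= self.expires


@dataclass
class LineageRecord:
    """Hypothetical lineage entry tying a dataset to its source and consent."""
    dataset_id: str
    source: str
    content: bytes
    consent: Consent
    parent_ids: list = field(default_factory=list)

    def fingerprint(self) -> str:
        # A SHA-256 digest of the raw bytes makes later tampering detectable.
        return hashlib.sha256(self.content).hexdigest()


def authorize(record: LineageRecord, purpose: str, on: date) -> dict:
    """Return an audit entry if consent covers the purpose, else raise."""
    if not record.consent.permits(purpose, on):
        raise PermissionError(f"{record.dataset_id}: no consent for {purpose!r}")
    return {
        "dataset_id": record.dataset_id,
        "source": record.source,
        "parents": list(record.parent_ids),
        "sha256": record.fingerprint(),
        "purpose": purpose,
        "date": on.isoformat(),
    }
```

In this sketch, a training pipeline would call `authorize` before reading a dataset and store the returned entry, so that each use can be traced back to its source and to the consent that covered it. A real deployment would also need access control and durable storage, which are out of scope here.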

2. Trusted Systems

AI systems must be understandable and verifiable. Explainability allows humans to understand why an AI reaches certain conclusions; security ensures systems are not maliciously manipulated; and reliability guarantees consistent performance across different scenarios.

Only when AI moves from a “black box” to auditable intelligence can trust truly take root.
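One way to read "auditable" in practice is a tamper-evident record of what a model decided and why. The sketch below is an illustration under stated assumptions, not a prescribed design: the `AuditLog` class and its field names are hypothetical, and it assumes each decision can be serialized as JSON. Each entry stores the hash of the previous entry, so any later alteration breaks the chain.

```python
import hashlib
import json


class AuditLog:
    """Append-only log in which each entry commits to the previous entry's hash."""

    GENESIS = "0" * 64  # placeholder hash for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(body):
        # sort_keys gives a canonical serialization, so the hash is reproducible.
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def append(self, model_id, inputs, output, explanation):
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {
            "model_id": model_id,
            "inputs": inputs,
            "output": output,
            "explanation": explanation,
            "prev_hash": prev_hash,
        }
        entry = dict(body, hash=self._digest(body))
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute every hash and check that the chain is unbroken."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```

An auditor who holds the latest hash can call `verify()` to confirm that no recorded decision or explanation was edited after the fact. The log says nothing about whether the explanation itself is faithful to the model; that remains a separate explainability problem.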

3. Trusted Governance

Beyond technology, institutions matter. AI ethics committees, clearly defined accountability for models, and regulatory frameworks for cross-domain data exchange all belong to the governance layer of trust.

Governments and enterprises must work together to establish principles of transparency and auditability so that AI development is not merely legal, but genuinely accountable. This institutionalized trust acts as a safety fuse: a safeguard that allows AI to integrate safely into the fabric of society.

III. From Individual Trust to Collective Trust: The Implications of Sovereign AI

In the global AI landscape, computing power and data are increasingly concentrated in the hands of a few multinational giants. For small and medium-sized countries, this concentration represents a serious risk: AI dependence may evolve into the loss of data sovereignty and even cognitive sovereignty.

This is why the concept of Sovereign AI has emerged. It emphasizes a nation’s need to possess its own AI computing infrastructure, model development capabilities, and trusted data spaces—ensuring that local data remains local, algorithms remain controllable, and applications remain trustworthy. This is not merely a technological race, but a competition in trust infrastructure. Whoever succeeds in building a trustworthy AI ecosystem will secure the foundation of future digital sovereignty.

IV. Trust as the New Competitive Advantage in the AI Era

As AI penetrates every layer of social operation, trust is no longer a secondary condition—it has become a new form of economic capital.

Enterprises that offer auditable AI APIs, data-sovereign cloud platforms, or Trust-as-a-Service models will gain durable advantages across the AI value chain. For governments, the adoption of trustworthy AI will determine public acceptance of AI-enabled governance. For businesses, trust becomes a core element of brand competitiveness. For individuals, trust is the foundation for coexistence with AI.

In the AI era, trust is no longer merely a belief—it is a capability that can be designed, verified, and quantified.

V. Conclusion: Making AI Worthy of Trust

AI development should not come at the expense of trust. True progress lies in achieving a new balance between efficiency and trust. Trust is not a brake on AI innovation—it is an accelerator. It encourages data sharing, AI adoption, and collective participation in AI evolution. When we succeed in building a full-stack trust architecture—from technology to institutions, from enterprises to nations, and from individuals to society—AI will become not just a tool for humanity, but a partner in trust.

Making AI worthy of trust also makes humanity’s future worth believing in.

Source: Business Today