Taiwan's first enterprise-grade GenAI model series with an enhanced Traditional Chinese corpus

Formosa Foundation Models: more localized, more precise, and smarter

Traditional Chinese-enhanced open-source language and multimodal models

Taiwan AI Cloud, a leading Taiwanese company, was the first to release a large-scale Traditional Chinese language model: the Formosa Foundation Models (FFM). Built on open-source models ranging from BLOOM and Mistral to the latest Llama releases, the FFM series offers Traditional Chinese-enhanced versions of these models with added features.

For each model family we offer the most complete range of parameter sizes, including 8B, 70B, and 405B models, as well as models with a Mixture-of-Experts (MoE) architecture. To keep pace with rapidly changing GenAI application needs, we continually provide a complete and diverse range of model specifications and application services, accelerating enterprise adoption of AI 2.0.

FFM's Six Major Advantages: The Most Complete Commercial Model Service

The only provider of both cloud and on-premises commercial licenses, meeting diverse enterprise needs.

| FFM | Cloud Deployment: AI Foundry Service | On-Premises Deployment: FFM On-Premises License |
|---|---|---|
| Available models | FFM model series; various open-source models | FFM model series, continuously updated with the best-performing models |
| Use | AFS ModelSpace model inference service; AFS Platform + cloud model optimization service | Model training and model inference |
| Billing | Billed by deployment time or by number of tokens used | Annual license fee |
| Supported inference methods | Chat UI / Playground / API | API |

*For the complete list of licensed models and their hardware requirements, see the FFM On-Premises License List.

FFM provides the latest and most complete model specifications.

⭐Latest and most complete model specifications: We select leading open-source models for Traditional Chinese enhancement training and offer them in a range of parameter sizes.

⭐Enhanced Tool Call: Faster and more accurate tool chaining and selection greatly expand the model's application scenarios.

⭐OpenAI API compatibility: Interact with FFM models through the OpenAI API and switch seamlessly between FFM and other models.
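Because FFM is described as OpenAI API compatible, a request should follow the OpenAI Chat Completions shape. The sketch below builds, but does not send, such a request with one tool definition, using only the Python standard library. The base URL, model ID, API key, and the `get_weather` tool are hypothetical placeholders, not values published on this page; consult your AFS or FFM deployment for the real ones.

```python
import json
import urllib.request

# Hypothetical placeholders: the real endpoint, model ID, and key come from
# your FFM / AFS deployment and are NOT taken from this page.
BASE_URL = "https://ffm.example.com/v1"
MODEL = "llama3.3-ffm-70b"  # hypothetical model ID
API_KEY = "YOUR_API_KEY"

# One tool described in the OpenAI "tools" format (JSON Schema parameters).
# get_weather is a hypothetical tool used only for illustration.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Look up the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}


def build_chat_request(prompt: str, tools: list) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "tools": tools,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        # ensure_ascii=False keeps Traditional Chinese text readable in the body
        data=json.dumps(body, ensure_ascii=False).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("台北今天天氣如何？", [WEATHER_TOOL])
    print(req.get_method(), req.full_url)
    # To actually send it against a real endpoint:
    # with urllib.request.urlopen(req) as resp:
    #     print(json.load(resp))
```

Keeping request construction separate from sending makes it easy to point the same code at a different OpenAI-compatible endpoint by changing only `BASE_URL` and `MODEL`.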

| Multimodal Model | Parameters | Context Length | Traditional Chinese Capability | Model Evaluation |
|---|---|---|---|---|
| ⭐Llama 3.2-FFM | 11B | 32K | Greatly improved OCR, image understanding, and accuracy | Outperforms the native model in multiple evaluations |

| Large Language Model | Parameters | Context Length | Expanded Vocabulary | Tool Calling | Model Evaluation (TMMLU+) |
|---|---|---|---|---|---|
| ⭐Llama 3.3-FFM | 70B | 32K | Same as native | Enhanced tool calling (accuracy up to 94%) | 70B surpasses GPT-4 |
| Llama 3.1-FFM | 405B / 70B / 8B | 32K | Same as native | Enhanced tool calling | 70B surpasses GPT-4 |
| Llama3-FFM | 70B / 8B | 8K | Same as native | Enhanced tool calling | 70B surpasses GPT-4 |
| FFM-Mixtral-8x7B | MoE 8x7B | 32K | Supported | Function calling added | Surpasses GPT-3.5 |
| FFM-Mistral-7B | 7B | 32K | Supported | Function calling added | Surpasses GPT-3.5 |
| FFM-Llama2-v2 | 70B / 13B / 7B | 4K | Expanded Traditional Chinese vocabulary | Function calling added | 70B surpasses GPT-3.5 |
| FFM-Llama2 | 70B / 13B / 7B | 4K | None | Not supported | 70B approaches GPT-3.5 |

| Vector Model | Parameters | Context Length | Vector Dimensions | Main Supported Languages | Model Evaluation |
|---|---|---|---|---|---|
| ⭐FFM-Embedding-v2.1 | 1B | 8K | 2,048 (customizable) | Traditional Chinese / English / French / German / Italian / Spanish / Portuguese / Hindi / Thai | Enhanced for legal texts; surpasses OpenAI in Chinese and English evaluations |
| FFM-Embedding-v2 | 1B | 8K | 2,048 (customizable) | Traditional Chinese / English / French / German / Italian / Spanish / Portuguese / Hindi / Thai | Surpasses OpenAI in Chinese and English evaluations |
| FFM-Embedding | 1B | 2K | 1,536 | Traditional Chinese / English | N/A |

*To use Llama 3.1-FFM-405B, please inquire about FFM on-premises licensing.

Enterprise-level large model optimization solution

AFS Shuttle

A shared-resource ("ride-sharing") service for large language model optimization

Low cost and rapid deployment: the best choice for proof of concept (POC).

Product Features

✓ Easy-to-use no-code portal

✓ A variety of pre-trained models available

✓ Plans scheduled around customer needs and total token volume

✓ National-grade cybersecurity protection and tenant environment isolation

✓ Further integration with secure network environments (including VPN)

✓ Fixed-price packages that let enterprise users quickly obtain GPU resources for POC verification

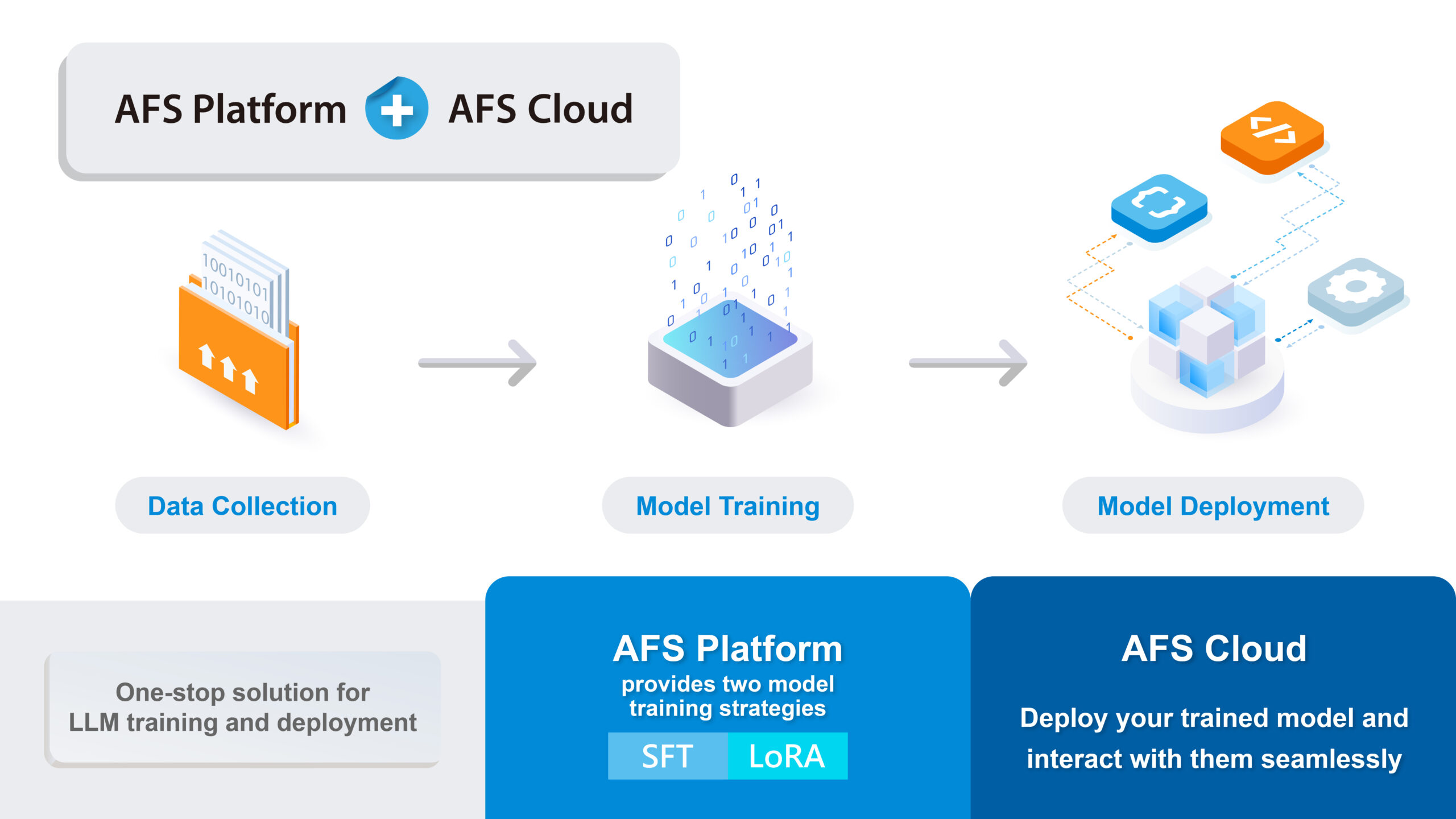

"AFS (AI Foundry Service)" provides a one-stop integrated service to help enterprises develop their own enterprise-grade generative AI models.


FFM Series

Llama 3.3-FFM

Llama 3.3-FFM offers excellent Traditional Chinese and foreign-language capabilities along with comprehensively enhanced Tool Call support: it can invoke multiple external tools simultaneously, with significantly higher accuracy than the native model, helping you build highly efficient AI agents!
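Invoking several tools at once usually means the model returns more than one entry in `tool_calls`; the client runs each one and sends the results back as `tool` messages. The sketch below assumes the OpenAI tool-calling message format (in line with FFM's stated OpenAI API compatibility). The two tools and the example assistant message are hypothetical and made up for illustration; they are not part of FFM.

```python
import json


# Hypothetical local tools; a real agent would call external services here.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"


def get_time(city: str) -> str:
    return f"10:00 in {city}"


TOOLS = {"get_weather": get_weather, "get_time": get_time}


def run_tool_calls(assistant_message: dict) -> list:
    """Execute every tool call in an OpenAI-format assistant message and
    return the 'tool' messages to append to the conversation."""
    results = []
    # tool_calls may be missing or null when the model answers directly.
    for call in assistant_message.get("tool_calls") or []:
        fn = TOOLS[call["function"]["name"]]
        # In the OpenAI format, arguments arrive as a JSON-encoded string.
        args = json.loads(call["function"]["arguments"])
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": fn(**args),
        })
    return results


# Made-up assistant message requesting two tools in a single turn.
example = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {"id": "call_1", "type": "function",
         "function": {"name": "get_weather", "arguments": '{"city": "Taipei"}'}},
        {"id": "call_2", "type": "function",
         "function": {"name": "get_time", "arguments": '{"city": "Taipei"}'}},
    ],
}

for msg in run_tool_calls(example):
    print(msg)
```

Each result carries the matching `tool_call_id`, which is how the model pairs results with its requests when the conversation continues.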

Llama 3.2-FFM

The only Llama 3.2-based Traditional Chinese multimodal model! It enhances Llama 3.2's Traditional Chinese capabilities and significantly improves optical character recognition (OCR), image understanding, and question answering, making it the best choice for Traditional Chinese multimodal applications!

Llama 3.1-FFM

Llama 3.1-FFM offers excellent Traditional Chinese and multilingual processing and, building on a powerful base model, enhances Tool Call accuracy and OpenAI API compatibility, significantly expanding the model's capabilities and application areas!

Llama3-FFM

The first large-scale Traditional Chinese language model to surpass GPT-4 on the TMMLU+ benchmark. Built on Meta Llama 3 and trained on a high-quality Traditional Chinese corpus, it offers powerful reasoning and supports diverse application scenarios. The model also supports Tool Call, enabling rapid integration with external information for comprehensive commercial applications.

FFM-Mistral

FFM-Mistral is trained from Mistral AI's open-source models on a high-quality Traditional Chinese corpus to provide a localized and accurate interactive experience. FFM-Mixtral-8x7B uses a Mixture-of-Experts (MoE) architecture that routes each token through only a subset of its experts, so it can scale to a large parameter count at low computational cost while outperforming GPT-3.5 in Traditional Chinese. With a native 32K context length, it can process large amounts of text while maintaining inference accuracy.
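To illustrate why an MoE model can hold many parameters yet stay cheap to run, here is a toy sketch of top-2 routing in the style of Mixtral on a scalar input: a router scores all eight experts, only the two highest-scoring experts are evaluated, and their outputs are mixed with softmax weights renormalized over those two. The experts and router scores are made up; this is an illustration of the general technique, not FFM-Mixtral's implementation.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(x, router_logits, experts, k=2):
    """Toy sparse MoE layer on a scalar input.

    Only the top-k experts (by router logit) are evaluated; their outputs
    are mixed with softmax weights renormalized over those k experts.
    """
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    weights = softmax([router_logits[i] for i in top])
    output = sum(w * experts[i](x) for w, i in zip(weights, top))
    return output, top


# Eight toy "experts": expert i simply scales its input by i.
calls = []


def make_expert(i):
    def expert(x):
        calls.append(i)  # record which experts actually ran
        return i * x
    return expert


experts = [make_expert(i) for i in range(8)]
router_logits = [0.1, 2.0, -1.0, 0.5, 1.5, 0.0, -0.5, 0.3]  # made-up scores

y, chosen = moe_layer(1.0, router_logits, experts, k=2)
print(chosen, calls, round(y, 4))
```

Only two of the eight expert functions run, which is the source of the cost savings: compute per token scales with k, while total capacity scales with the number of experts.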

FFM-Llama2-v2

FFM-Llama2 is the world's first Traditional Chinese-enhanced version of the full Llama 2 model series (70B/13B/7B). It retains the strong response style and capabilities of the original Meta Llama 2 and delivers outstanding performance on reasoning and knowledge tests. v2 adds an expanded Traditional Chinese vocabulary, significantly improving inference efficiency and Traditional Chinese performance.

FFM-Embedding-v2

FFM-Embedding is a vector embedding model that enhances semantic search. It supports Traditional Chinese and eight other languages. The v2 series upgrades both the maximum input length and the vector dimensions, making information retrieval faster and semantic matching more accurate, and it outperforms OpenAI Embedding in Chinese and English evaluations. A legal-domain enhanced version, v2.1, has also been released.
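Semantic search with an embedding model typically ranks documents by cosine similarity between the query vector and each document vector. The sketch below shows that ranking step with made-up 4-dimensional vectors. It assumes you have already obtained the embeddings (how they are requested from FFM-Embedding is not covered here); real FFM-Embedding-v2 vectors have 2,048 (customizable) dimensions.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb)


def search(query_vec, docs, top_n=2):
    """Rank documents by cosine similarity to the query embedding."""
    scored = [(cosine(query_vec, vec), doc_id) for doc_id, vec in docs.items()]
    scored.sort(reverse=True)  # highest similarity first
    return scored[:top_n]


# Made-up 4-dimensional vectors standing in for real embeddings.
docs = {
    "contract_law": [0.9, 0.1, 0.0, 0.2],
    "weather_report": [0.0, 0.8, 0.5, 0.1],
    "labor_law": [0.8, 0.2, 0.1, 0.3],
}
query = [0.85, 0.15, 0.05, 0.25]

for score, doc_id in search(query, docs):
    print(f"{doc_id}: {score:.3f}")
```

A production system would usually store normalized vectors in a vector index instead of scanning every document, but the similarity measure is the same.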

Free Consultation Service

Contact Taiwan AI Cloud experts to learn about and start using the solution that suits you.