ChatGPT burst onto the scene and became an overnight sensation, setting off an international arms race in large language models. Companies such as Google, Baidu, and Alibaba have since released comparable models that can handle more complex text tasks.

However, Lee Hung-yi, an associate professor in the Department of Electrical Engineering at National Taiwan University (NTU) whose research covers machine learning, deep learning, semantic understanding, and speech recognition, pointed out that it is not enough for AI to read text or perform speech recognition; it must understand every aspect of speech.

"AI needs to be able to recognize the emotions in a person's speech and then give different responses based on different emotions," said Li Hongyi. "Speech is the most natural way for people to communicate. Children can learn human language with almost no labeled data, and machines should be able to do the same thing."

Developing voice AI is far more difficult than developing text AI.

The complexity of voice AI, however, is on a completely different level from that of text AI. Lee pointed out that speech and text do not map one-to-one, so speech cannot simply be converted into text. Speech carries many qualities that text cannot express, including tone of voice, who is speaking, the speaker's background, and even the speaker's health and age.

Take BERT (Bidirectional Encoder Representations from Transformers), the pre-trained language model Google proposed in 2018, as an example. Google simply had the model read a large amount of text (about 3.3 billion words from English novels and English Wikipedia) and then used it to solve a variety of text tasks. The computing resources this required were already enormous, let alone what a more complex pre-trained speech model would need!

"For example, BERT uses a fill-in-the-blank approach, allowing machine learning to fill in the correct text. But you can't directly apply a similar approach to speech," said Li Hongyi. "Because the units of written characters are easy to distinguish. Taking Chinese as an example, one square character is one unit. But in speech, it's just a bunch of sound signals, and you simply don't know where a unit is."

Furthermore, sound signals form much longer sequences than text, which means that speech AI requires far more computational resources. "For example, a 100-word article is a sequence of length 100," said Lee. "The average person speaks about 100 words per minute. Speech is usually represented in units of 0.01 seconds, with each 0.01-second unit represented by a vector. One minute of speech therefore requires 6,000 vectors to represent it."
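The arithmetic in this comparison can be checked with a short sketch. The function names and the constant `FRAMES_PER_SECOND` are hypothetical helpers introduced here; the numbers (100 words per minute, one vector per 0.01 seconds) come from the quote.

```python
FRAMES_PER_SECOND = 100  # one vector per 0.01 s, as stated in the quote


def text_sequence_length(num_words: int) -> int:
    """One unit per word, following the 100-word article example."""
    return num_words


def speech_sequence_length(duration_seconds: int) -> int:
    """Number of frame vectors needed for the given duration."""
    return duration_seconds * FRAMES_PER_SECOND


text_len = text_sequence_length(100)     # 100 words, about a minute of talking
speech_len = speech_sequence_length(60)  # the same minute as an audio signal
ratio = speech_len // text_len

print(text_len, speech_len, ratio)  # 100 6000 60
```

The same content is therefore about 60 times longer as speech than as text. If a model's cost grew with the square of the sequence length, as it does for standard Transformer self-attention, that would mean roughly 3,600 times the cost; this scaling is an added assumption, not something stated in the interview.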

Taizhi Cloud provides computing power to help National Taiwan University overcome bottlenecks.

The more data there is, the more computing resources are needed. At the time, however, the computing resources in the NTU laboratory were very limited, and the team could use only 1,000 hours of training data. Even so, training a single model could still take a week, which was a heavy burden for the NTU team.

Lee also pointed out that with more computing resources, the NTU team could likely achieve even better results. To encourage more people to develop such systems, Lee decided to launch the SUPERB (Speech Processing Universal Performance Benchmark) challenge starting in 2020. Participants upload systems packaged with their trained foundation models, and the organizers then add further applications on top of these systems to evaluate their performance. This approach is extremely resource-intensive, however, and the organizers must supply the resources, which is far more than an ordinary academic research institution can afford.

Fortunately, Taizhi Cloud stepped in and successfully supported the SUPERB competition. Companies such as Meta and Microsoft have also used the competition's data, demonstrating that SUPERB is now recognized worldwide as an evaluation standard for self-supervised learning.

JSALT shines brightly

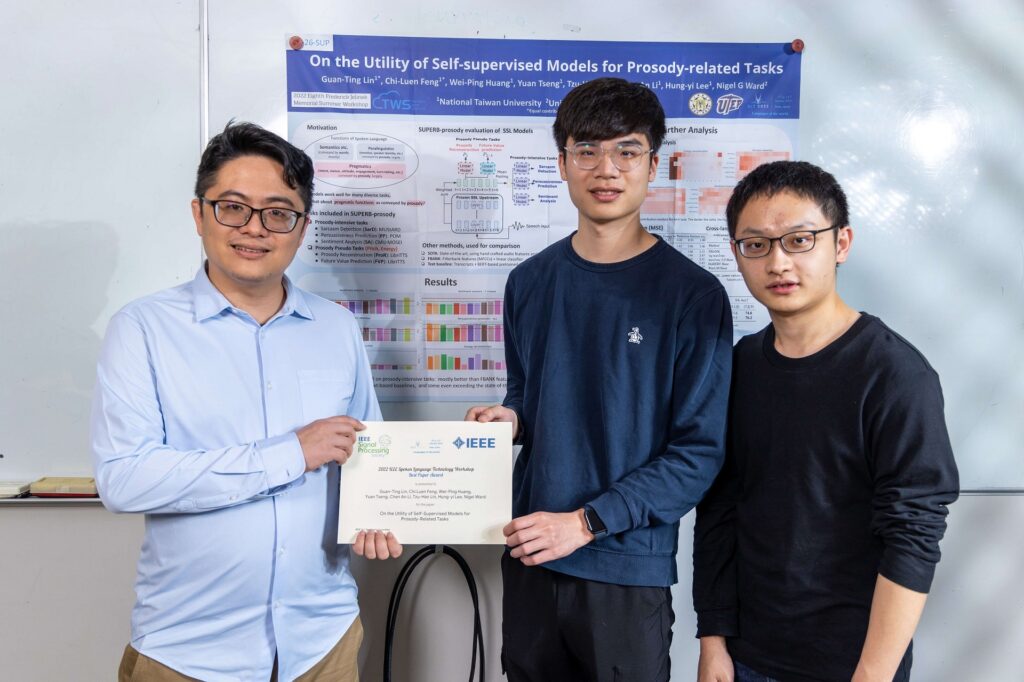

The NTU team then took part in JSALT (Eighth Frederick Jelinek Memorial Summer Workshop), one of the most important venues for presenting research results in speech AI. Once again, Taizhi Cloud provided the computing resources, allowing the NTU team to compete with world-class speech AI R&D teams.

Lee recalled that the teams competing with the NTU team at the time included some backed by the European Union, which also received free and unlimited computing resources from the French government. With the strong support of Taizhi Cloud, however, the NTU team's research results still stood out.

"When the EU team said they used 100,000 GPU hours, the NTU team also used 100,000 GPU hours. It is clear that without Taizhiyun, we would not have been able to have the same level of computing resources as the EU team."Li Hongyi said, "Moreover, at the seminar, it's not enough to just say that it's theoretically feasible; we also have to demonstrate results. We used Taizhi Cloud remotely at Johns Hopkins University, and it was only at the end of the seminar that we were able to convince nearly a hundred scholars from all over the world that our idea had not only been realized, but that we had achieved the best results in the world at that time."

Lin Kuan-ting, a member of the NTU team and currently a doctoral student in the Department of Telecommunications at NTU, pointed out that Taizhi Cloud's resources can accommodate several times the data and computing capacity of the NTU laboratory. With Taizhi Cloud's higher-end computing resources, the environment can be configured on a larger scale, using 8 or 16 GPUs at a time, so many large experiments can be run freely with good results.

Another member, Shih Yi-jen, who will soon be studying at the University of Texas at Austin, also pointed out that after switching to Taizhi Cloud for computing resources, not only are there more GPUs available, but the storage space is also larger, allowing for the creation of up to a dozen models. This enables faster experimentation with various ideas, leading to further optimization and improvement.

Shih Yi-jen further pointed out that Taizhi Cloud's storage usage and billing are also more flexible. If the model is not that large, there is no need to provision so many resources, and the budget can be controlled effectively according to actual usage.

Recognizing the Importance of Computing Resources

"When we are doing research, we dare not do anything bigger once we are tied to the computing resources at hand," said Li Hongyi. "The model and the amount of data need to be large enough for the AI to have an epiphany during training. If there are not enough computing resources, the AI will take a very long time to learn."

This is where the cross-node GPU service provided by Taizhi Cloud becomes particularly important. "Suppose someone wants to run a model with 20 billion parameters and a single system can't run it; then they need to run it across machines," said Lee. "If running across nodes isn't possible, you can only trade time for GPUs, but that wastes time. Development time is also a cost, and it's even a competition."
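A back-of-the-envelope estimate shows why a 20-billion-parameter model outgrows a single GPU. The figures below are assumptions, not numbers from the interview: 2 bytes per parameter for half-precision weights, about 16 bytes per parameter as a common rule of thumb for mixed-precision training with the Adam optimizer (weights, gradients, and optimizer states), and a hypothetical 80 GB accelerator. Activation memory is ignored, so real requirements are higher.

```python
GB = 10**9  # decimal gigabyte, used here for round numbers


def model_memory_bytes(num_params: int, bytes_per_param: int) -> int:
    """Memory needed for a model at the given per-parameter cost."""
    return num_params * bytes_per_param


def min_gpus(total_bytes: int, gpu_memory_bytes: int) -> int:
    """Smallest number of GPUs whose combined memory covers total_bytes."""
    return -(-total_bytes // gpu_memory_bytes)  # ceiling division


params = 20 * 10**9          # the 20-billion-parameter model from the quote
gpu_memory = 80 * GB         # hypothetical per-GPU memory (assumption)

weights_only = model_memory_bytes(params, bytes_per_param=2)    # fp16 weights
full_training = model_memory_bytes(params, bytes_per_param=16)  # rule of thumb

print(weights_only // GB)                   # 40
print(full_training // GB)                  # 320
print(min_gpus(full_training, gpu_memory))  # 4
```

Under these assumptions the training state alone needs several GPUs. Whether that also means several machines depends on how many GPUs each node holds and on the activation memory this sketch leaves out.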

Lee urged all sectors to pay more attention to the importance of computing resources. "Having computing resources to train AI is not simply a matter of buying 10,000 GPUs," Lee said. "How do you connect 10,000 GPUs so that they all work together? How do you handle the data when one of them fails so that the model doesn't break down? These all require advanced technology and deserve greater attention."

"In the era of AI 2.0, all industries will want to have their own similar models, but large language models cannot run on their own. At this time, they can use the services of Taizhi Cloud to do more things," said Li Hongyi.

A highly secure and implementable enterprise-level generative AI solution: https://tws.twcc.ai/afs/