Photo Credit: INSIDE/Chris Photography

Taiwan AI Cloud combines the technical capabilities of ASUS Cloud with the supercomputing expertise of the National Center for High-Performance Computing (NCHC). It took over half of the capacity of Taiwan's Taiwania 2 supercomputer, making it one of the earliest companies in Taiwan to focus on GPU computing power.

Stepping into the company's headquarters in Tamsui, New Taipei City, INSIDE was intrigued. Taiwan AI Cloud had been established for only a little over four years, yet it had already taken part in building five of the eight Taiwanese supercomputers that made the world's Top 500 list. It had also transformed itself into a cloud computing company with a strong "local flavor," offering AI Agent services that span both hardware and software. How did it get there?

Starting a business by building a supercomputer

Although the company is a subsidiary of ASUS Group, Kevin Lee, Chief Strategy Officer of Taiwan AI Cloud, took care to explain that the circumstances of its founding were unusual. Back in August 2019, "Taiwania 2," Taiwan's own supercomputer, for which ASUS handled system development and integration, was completed and began commercial operation. Around that time, Chen Liang-chi, then Minister of Science and Technology, began considering a private-sector spin-off in line with the Taiwan AI Action Plan.

This vision was officially realized at the end of February 2021. Taiwan AI Cloud combined the technological capabilities of ASUS Cloud with the supercomputing expertise of the National Center for High-Performance Computing (NCHC) and took over half of the capacity of the Taiwania 2 supercomputer, becoming one of the earliest companies in Taiwan to focus on GPU computing power. On the way from policy incubation to market competition, however, the company went through many adjustments. "Just like a startup, it's been tough, and we gradually adjusted in many directions," said Kevin Lee. After several years of exploration, Taiwan AI Cloud restructured its business into three business centers, each with a clear market positioning and core technology.

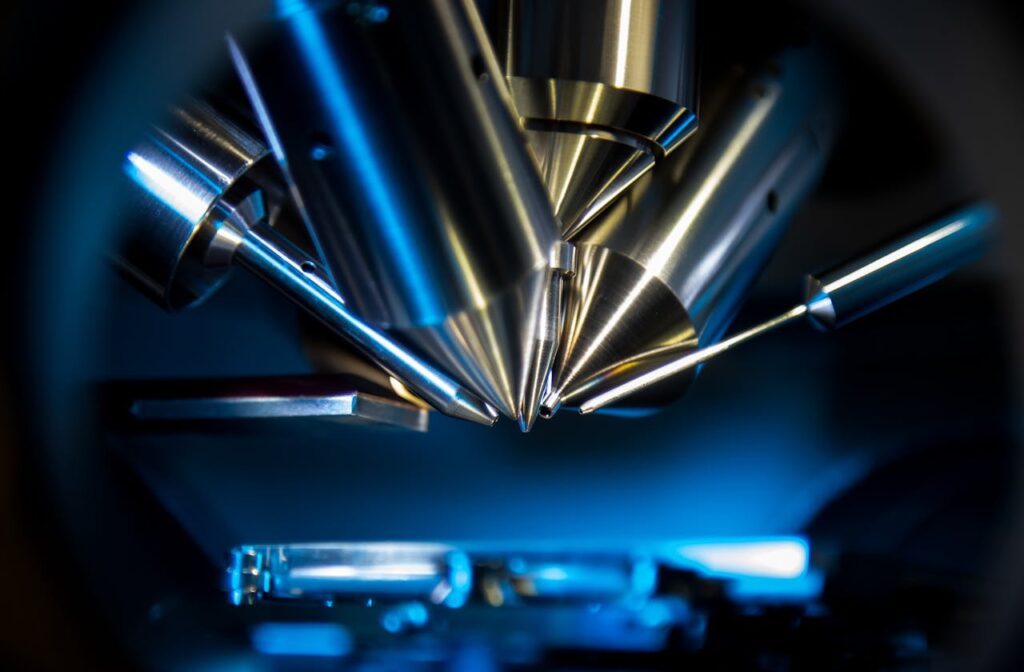

The first is Taiwan AI Cloud's TIS (Technology, Infrastructure, Services). Its role is straightforward: it carries forward the technical capabilities accumulated since 2018 and 2019, specializing in building GPU-based AI HPC computing clusters. Kevin Lee said, "I think this group is the most outstanding in Taiwan at building AI supercomputers, and they should even be at the World Cup level."

The second is TGS, the GPU service group for AI cloud computing. It offers a cloud service architecture that provides a complete cloud platform, from CPUs, GPUs, and containers to model training and inference, primarily targeting SMEs and the cloud AI inference market. This division has a special background: Chen Liang-chi specifically instructed that its business "must be thought of from a technology-centric perspective," and that the cloud should not be treated as a mere pipe. TGS therefore insists on an open-source software architecture. Although the initial build-out was difficult, the platform's service availability under its SLA has now reached 99.95%, in line with international standards.

The third is the TCS Digital Consulting Service Center (TWSC Consulting Service), an integrated consulting business unit that combines model building, AI agents, and process optimization. It helps enterprises apply intelligent solutions to real-world industry problems, forming a complete chain from underlying computing power to application deployment. "A year and a half ago, we realized that it was really hard to make money selling pure GPU computing power. It was only NT$58 per hour, less than the price of a bento box." The company therefore turned to value-added services and end-to-end solutions.
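To put the 99.95% availability figure quoted for TGS in perspective, the short calculation below converts an availability percentage into the downtime it permits. It is only a worked example: the function name and the 30-day and 365-day windows are assumptions chosen for illustration, not terms taken from Taiwan AI Cloud's actual SLA.

```python
MINUTES_PER_DAY = 24 * 60


def allowed_downtime_minutes(availability_pct: float, days: int) -> float:
    """Return the maximum downtime, in minutes, that still meets the target."""
    if not 0 <= availability_pct <= 100:
        raise ValueError("availability_pct must be between 0 and 100")
    unavailable_fraction = 1 - availability_pct / 100
    return unavailable_fraction * days * MINUTES_PER_DAY


if __name__ == "__main__":
    # Hypothetical measurement windows; a real SLA defines its own period.
    for label, days in [("30-day month", 30), ("365-day year", 365)]:
        minutes = allowed_downtime_minutes(99.95, days)
        print(f"{label}: about {minutes:.1f} minutes of downtime allowed")
```

Under these assumptions, 99.95% works out to roughly 21.6 minutes of downtime in a 30-day month, or about 4.4 hours over a year.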

Photo Credit: National Applied Research Laboratories

▲ Taiwan AI Cloud got its start with Taiwan's Taiwania 2 supercomputer.

AI 1.0 and 2.0 are complementary

When ChatGPT emerged at the end of 2022, the entire AI industry was shaken. Taiwan AI Cloud, too, faced the challenge of moving from machine-learning-based AI 1.0 to generative AI 2.0. In Kevin Lee's view, however, the two generations of technology do not conflict. "Strictly speaking, in AI 1.0, Taiwan AI Cloud didn't deploy directly at end-user sites, because we were very clear that AI 1.0 was a very demanding business," he recalled. Traditional AI required extensive data cleaning and labeling, as well as adapting to the ever-changing needs of customers on-site; it was a labor-intensive technical service.

The emergence of generative AI changed the game. Taiwan AI Cloud found that AI 1.0 and AI 2.0 are in fact complementary technical architectures. "You can leverage AI 2.0's strengths in text understanding, reasoning, and data processing, and then integrate existing AI 1.0 to achieve real inference at the endpoint; the two are blended together."

Richard Yu, director of the Digital Foresight Lab at Taiwan AI Cloud, explained how this integration works in practice: "For example, the parking management system we developed basically uses AI 1.0 for visual recognition, but visual processing has its flaws. There may be obstructions next to the parking space, or misjudgments when a car passes by. That is where a generative AI vision-language model can play a role in the backend, using the model to help clean up the misjudgments."
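The division of labor described for the parking system can be sketched roughly as follows. This is a minimal illustration, not the company's implementation: the `Detection` type, the confidence threshold, and the `vlm_review` callback are all hypothetical, and routing by confidence score is an assumption about how a backend model might be asked to re-check doubtful results.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable


@dataclass
class Detection:
    """One result from the conventional (AI 1.0) vision model."""
    space_id: str
    occupied: bool
    confidence: float  # detector's confidence in `occupied`, 0.0 to 1.0


def resolve_occupancy(
    detections: Iterable[Detection],
    vlm_review: Callable[[Detection], bool],
    threshold: float = 0.8,  # hypothetical cut-off for "trust the detector"
) -> Dict[str, bool]:
    """Keep confident detections; send doubtful ones to a backend reviewer."""
    results: Dict[str, bool] = {}
    for det in detections:
        if det.confidence >= threshold:
            results[det.space_id] = det.occupied
        else:
            # In a real system this would call a vision-language model
            # with the camera frame; here it is an injected stand-in.
            results[det.space_id] = vlm_review(det)
    return results


if __name__ == "__main__":
    detections = [
        Detection("A1", occupied=True, confidence=0.95),
        Detection("A2", occupied=True, confidence=0.55),  # e.g. a passing car
    ]
    # Stand-in reviewer that overrules every doubtful "occupied" call.
    print(resolve_occupancy(detections, vlm_review=lambda det: False))
```

Passing the reviewer in as a function keeps the conventional detector and the generative model loosely coupled, so either one can be replaced without touching the other.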

This integration strategy gives Taiwan AI Cloud greater flexibility in responding to customer needs. "So far, everything we have implemented is actually a combination of 1.0 and 2.0. Many end-users are still using AI 1.0 for prediction because AI 2.0 was initially a black box; it was difficult to explain why the parameters came out the way they did," Kevin Lee said. He stressed that choosing the right technology for a specific problem matters far more than chasing the latest technology. As the reasoning capabilities of LLMs improve, however, AI 2.0 will account for a growing and increasingly practical share of real-world applications.

AI Agents need a four-layer framework

As AI 2.0 technology matures, AI Agents are seen as the intermediary technology that connects enterprise processes and realizes the value of AI. Before the term "Agent" became popular, Taiwan AI Cloud had already implemented related applications, combining models with the design of internal enterprise operating processes to turn AI into a concrete form of automated decision-making and operational execution.

Kevin Lee emphasized that AI Agents should not be viewed as a single technological tool, but understood within a four-layer framework. The first layer is the consulting stage, which confirms site requirements, data feasibility, and process clarity. The second layer involves model training and contract development, establishing an industry operational knowledge system. The third layer is the Agent itself, executing process control and task response. The fourth layer covers integration and application, followed by the final KPI performance evaluation. With this architecture, the Agent is no longer merely a chatbot or co-pilot tool, but an operational role that connects with industry know-how, is task-oriented, and delivers feedback of real value.

For its real-world cases, Taiwan AI Cloud chose to start with manufacturing. "Taiwan's manufacturing industry has the most complete processes and procedures, and there are definitely people who can explain them clearly." The most representative case is helping a technology manufacturer predict the parameters of the epitaxy (EPI) process.

The process is similar in concept to coating: layers are stacked one on another, up to hundreds of them, with complex causal relationships between the layers. "We use a large language model to understand all the procedures, establishing something like a process expert system."

Photo Credit: Shutterstock / Dazhi Image

▲ Taiwan AI Cloud uses AI Agent to assist the technology industry in predicting EPI process parameters for epitaxial wafers. (Illustrative image)

In the past, this work relied entirely on the experience of senior engineers. They needed to select the closest past cases based on the new specifications and requirements, adjust the parameters, and then proceed to trial production for verification. "If it wasn't right, we had to start over, and the whole batch would have to be discarded. That's the cost, and the company's finance department is very concerned about this kind of thing."

Applications in the medical field showcase another aspect of AI agents. Richard Yu explained their clinical decision support system: "In a hospital, everyone plays a role—doctors, nurses, pharmacists, lab technicians—and they need to make collective decisions when facing difficult patients. We wondered, could multi-agent systems be used to create virtual decision-making systems?"

The system simulates the various medical roles with different agents. "For example, the doctor agent has different settings for specialist or general internal medicine, the pharmacist agent will refer to 30,000 to 50,000 drug package inserts across Taiwan, and the medical laboratory technician agent needs to access multimodal data, including MRI, CT, and pathology reports." The ultimate goal is not to provide standard answers, but to provide decision support. "For example, when a patient has mixed symptoms of diabetes and kidney disease, are there any interactions that doctors might overlook when prescribing medication? After reviewing the visual pathology report, can the AI provide some predictions for doctors' reference?"
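The role-per-agent idea described above can be illustrated with a small sketch. Everything here is hypothetical: the `Case` fields, the three agent functions, and the placeholder inputs are invented for illustration, and each agent is a plain function standing in for a language model call. The sketch only shows how separate role opinions might be gathered side by side for a clinician to weigh, not how the actual system works.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Case:
    """A hypothetical patient case shared by all role agents."""
    conditions: List[str]
    medications: List[str]
    reports: Dict[str, str] = field(default_factory=dict)  # e.g. {"CT": "..."}


Agent = Callable[[Case], str]


def physician_agent(case: Case) -> str:
    return f"Physician: review the treatment plan for {', '.join(case.conditions)}."


def pharmacist_agent(case: Case) -> str:
    # A real agent would consult drug package inserts; this one only flags the check.
    return f"Pharmacist: check for interactions among {', '.join(case.medications)}."


def lab_agent(case: Case) -> str:
    kinds = ", ".join(sorted(case.reports)) or "no reports"
    return f"Lab technician: summarize findings from {kinds}."


def collect_opinions(case: Case, agents: Dict[str, Agent]) -> Dict[str, str]:
    """Ask every role agent about the same case and keep the answers by role."""
    return {role: agent(case) for role, agent in agents.items()}


if __name__ == "__main__":
    case = Case(
        conditions=["condition X", "condition Y"],  # placeholders, not medical data
        medications=["drug A", "drug B"],
        reports={"CT": "...", "pathology": "..."},
    )
    agents = {"physician": physician_agent, "pharmacist": pharmacist_agent, "lab": lab_agent}
    for role, opinion in collect_opinions(case, agents).items():
        print(f"[{role}] {opinion}")
```

Keeping the opinions separate by role, rather than merging them into one answer, matches the stated goal of decision support rather than a single standard answer.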

The local "Formosa"

Behind all of these cases is Taiwan AI Cloud's self-developed "Formosa Foundation Model," which comes in sizes from 8B and 13B up to 70B parameters and has been tuned for Traditional Chinese, social context, and local sensitivities. Kevin Lee said, "Open source is a necessary starting point, but practical applications require more localization and security governance."

However, the development of the Formosa model has also been full of challenges. "The first generation of Formosa was trained on BLOOM 176B. Back then, it was competing with GPT-3.5. We burned through over 100 million in computing power, but we sold zero units. It was too big and simply couldn't be implemented." After multiple iterations, the current Formosa model not only supports various scales, but has also been optimized for the needs of the Taiwan market.

Looking ahead, Taiwan AI Cloud expects AI to move gradually from the cloud to the edge. The team is developing a "plug-and-play" agent service platform that lets enterprises deploy small models (such as 8B) on-premises and switch between models and agents according to the task. Paired with ASUS's ultra-compact desktop AI supercomputer, this opens up AI deployment options that SMEs can afford. "With this solution, you can download a general LLM specializing in text processing today, or switch to a multimodal LLM that includes image recognition. If you try a model and feel it's not good enough, you can replace it and download another; switching models takes about ten minutes."
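The "one box, swappable models" idea can be pictured with a small sketch. The `ModelSlot` class, the task names, and the model names below are all hypothetical and are not part of any Taiwan AI Cloud or ASUS product; the sketch simply assumes a device that holds one active model at a time and replaces it when the task changes.

```python
from typing import Dict, List, Optional


class ModelSlot:
    """A single on-premises slot that holds one active model at a time."""

    def __init__(self, catalog: Dict[str, str]) -> None:
        self._catalog = dict(catalog)  # task name -> model name
        self.active: Optional[str] = None
        self.loads: List[str] = []  # record of every model actually loaded

    def switch_to(self, task: str) -> str:
        """Make the model for `task` active, loading it only if it changed."""
        if task not in self._catalog:
            raise KeyError(f"no model registered for task {task!r}")
        model = self._catalog[task]
        if model != self.active:
            # A real platform would download and load weights here,
            # which is the step the article says takes about ten minutes.
            self.active = model
            self.loads.append(model)
        return model


if __name__ == "__main__":
    slot = ModelSlot({
        "text": "example-text-8b",          # hypothetical model names
        "vision": "example-multimodal-8b",
    })
    for task in ["text", "text", "vision"]:
        print(task, "->", slot.switch_to(task))
    print("models loaded:", slot.loads)
```

Skipping the reload when the requested model is already active matters in this setting, since each real switch is described as taking minutes rather than seconds.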

This plug-and-play concept reflects Taiwan AI Cloud's reading of market trends. "Next, all industries will move towards a more lightweight AI market, which is easier to deploy because GPUs are much cheaper." Together with the edge computing devices launched by ASUS, Taiwan AI Cloud ultimately hopes to make AI applications more widespread and accessible.

[Source: INSIDE]